Data masking is the act of transforming parts of data to maintain its anonymity. There are several types of data masking, set according to the level of anonymity needed versus the value needed from the data. For example, if the need for anonymity is high, then the data may be redacted to an empty string, whereas if the data need to be an identifier for statistical purposes, the data may be hashed.

In the past, data masking was done mainly by creating a redacted copy of the data—but with the growth of data processing and available cloud resources, dynamic data masking is growing in popularity. Dynamic masking means that a single copy of the data is stored and that the masking is done before serving the data to the data consumers.

Reasons for Masking Data

Data is masked for different reasons, which usually fall into one of the four categories:

Security. The main reason here is risk reduction, and according to guidelines set by security teams, to limit the possibility of a sensitive data leak.

Commercial. This kind of data masking is done for business reasons, such as masking of financial data that should not be common knowledge, even within the organization. This is guided by either the data owners of specific data sets or by other teams, such as those in charge of data governance.

Compliance. This kind deals with masking projects driven by requirements or recommendations based on specific standards, regulations, and frameworks (such as the NIST Cybersecurity Framework). The projects are usually initiated by data governance, GRC, or compliance teams.

Privacy. This is to make sure that the organization meets privacy regulations when handling personally identifiable information (PII). This is usually led by the privacy office or legal team.

Data Masking in AWS Redshift

When either using Amazon Web Services (AWS) Redshift as a standalone data warehouse solution or as a data processing engine for a data lake, it is commonly used in environments where sensitive data of different types are to be found. Masking would mean that different teams in the organization may get different redaction levels for the same source data sets.

For example, in a healthcare company, specific medical professionals will receive the medical information about the patients but will not get certain data like the patients’ email addresses, payment cards, or home addresses. On the other hand, the accounting teams may get a mirror of that they will get the personal information without the health information. Another team, such as analysts or data scientists, may get hashed data so they can run statistical predictions and analytics without being exposed to sensitive data, and so on.

We will discuss several ways of doing masking of AWS Redshift data, with or without Satori:

Redshift Static Masking

The first method of masking data may seem archaic, but in some use cases it may be the best to use. Static masking means that you’re keeping copies of the data in different levels of redaction and giving access to data consumers according to policies. The data can be stored in different columns in a specific table using Redshift’s column-based security (i.e., email and email_redacted) but are usually kept in different tables, or even in different databases. When you are giving access to the users, you grant access according to the sensitivity level required for the user.

The data in this method are redacted on-creation, and this is done in most cases either as part of the extract, transform, load (ETL) procedure or by parallel insertion of data (for example from a Kafka queue) to different destinations, with different detail levels.

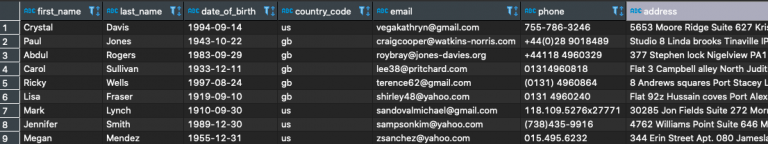

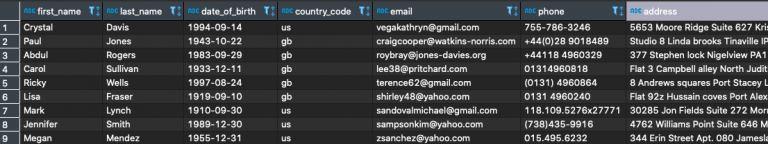

For example, if we have a customers table with the following records:

And we want to create a redacted version of the table with hashed names, unaffected country code, and emails with username replaced with the character “*”, leaving the domain name intact. We can do something along the lines of:

INSERT INTO redacted_customers (first_name, last_name, country_code, email) SELECT sha2(first_name, 256) AS first_name, sha2(last_name, 256) AS last_name, country_code, REGEXP_REPLACE(email, '[^@]+@', '*@') AS email FROM customers;

In many cases, especially where there are several use cases of the redacted data or a lot of sensitive data to redact, this has more cons than pros:

Depending on the specific way you create this, it may create delays in the data and a chance of inconsistencies between data sets handled by different teams.

This sometimes has a high operational cost, especially at scale. Even though storage is not very expensive, it still accumulates cost, and sometimes the compute and maintenance cost on each such ETL can grow over time.

Changes to In many cases, introducing new use cases or needs for masking of additional data, or differently creates a project that delays our time-to-value from the data.

These reasons make static masking less attractive to data teams nowadays and push for use of dynamic data masking.

Redshift Virtual Static Masking Using Views

Similarly, you can create a masked version of the data, without actually preparing the data ahead of time, by creating view overlays. This means that instead of putting the data in two places, you create a view that returns the filtered data and grant access to the view instead of to the source table.

With the same conditions as the previous example, it would look like this:

CREATE OR REPLACE VIEW redacted_customers AS SELECT sha2(first_name, 256) AS first_name, sha2(last_name, 256) AS last_name, country_code, REGEXP_REPLACE(email, '[^@]+@', '*@') AS email FROM customers;

This negates several of the issues we’ve had with truly static masking. We don’t have a delay in the time for the data to move to the masked view, and we don’t need to perform a lot of manual ETL work.

In terms of performance, the select query will need to run the masking functions (in this case sha2 and regexp_replace) on each query, so in a scenario with many selects, it may have a performance impact. Besides, since this is a view, you will need a workaround for use cases where data update is needed. Finally, making a copy for each masking type for each table may be hard to scale, which may call for dynamic masking.

Redshift Dynamic Masking

The first method of masking data may seem archaic, but in some use cases it may be the best to use. Static masking means that you’re keeping copies of the data in different levels of redaction and giving access to data consumers according to policies. The data can be stored in different columns in a specific table using Redshift’s column-based security (i.e., email and email_redacted) but are usually kept in different tables, or even in different databases. When you are giving access to the users, you grant access according to the sensitivity level required for the user.

The data in this method are redacted on-creation, and this is done in most cases either as part of the extract, transform, load (ETL) procedure or by parallel insertion of data (for example from a Kafka queue) to different destinations, with different detail levels.

For example, if we have a customers table with the following records:

And we want to create a redacted version of the table with hashed names, unaffected country code, and emails with username replaced with the character “*”, leaving the domain name intact. We can do something along the lines of:

INSERT INTO redacted_customers (first_name, last_name, country_code, email) SELECT sha2(first_name, 256) AS first_name, sha2(last_name, 256) AS last_name, country_code, REGEXP_REPLACE(email, '[^@]+@', '*@') AS email FROM customers;

In many cases, especially where there are several use cases of the redacted data or a lot of sensitive data to redact, this has more cons than pros:

Depending on the specific way you create this, it may create delays in the data and a chance of inconsistencies between data sets handled by different teams.

This sometimes has a high operational cost, especially at scale. Even though storage is not very expensive, it still accumulates cost, and sometimes the compute and maintenance cost on each such ETL can grow over time.

Changes to In many cases, introducing new use cases or needs for masking of additional data, or differently creates a project that delays our time-to-value from the data.

These reasons make static masking less attractive to data teams nowadays and push for use of dynamic data masking.

Read more about dynamic masking in our complete guide to dynamic data masking.

Dynamic Universal Masking Using Satori

This article would not be complete without mentioning the Satori Universal Masking feature, which can be used when your Redshift is integrated with Satori. Universal Masking takes a further step into ease of management, and allows you to set complete masking profiles in one central location, as well as apply these masking profiles globally:

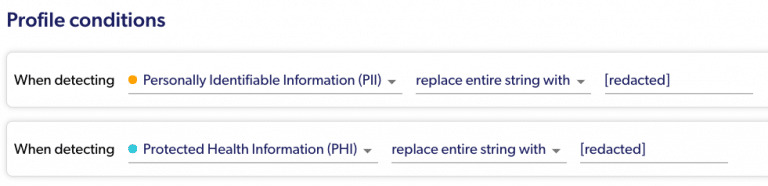

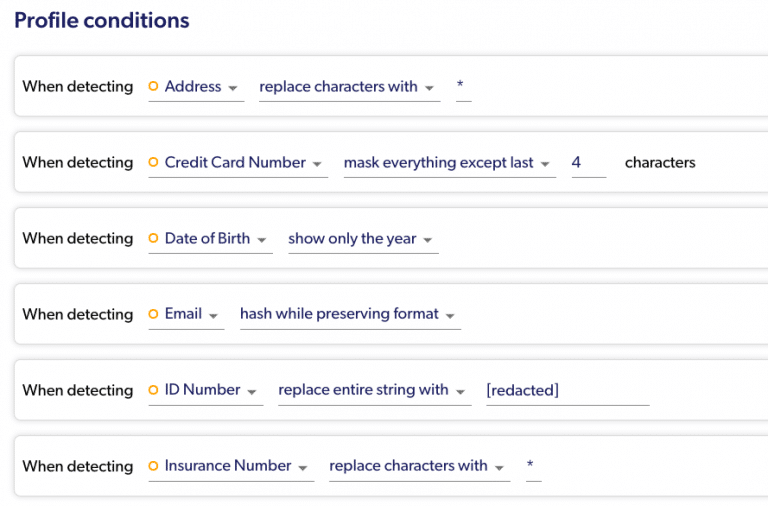

The profiles can be as generic as “mask anything that’s PII,” or as granular as setting a specific action for each data type.

Simple masking conditions

More granular masking conditions

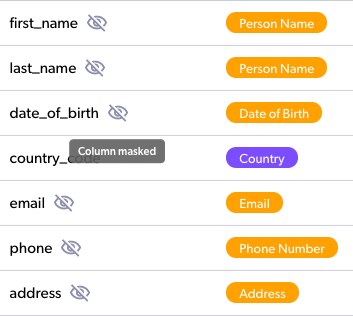

You can apply masking based on Redshift Users, identity provider (IdP) Groups, or even Data Directory Groups!

You can be as granular as setting the policy on specific columns (or even only for specific rows!), but you can also set them on several tables at once, on a complete schema or database, or even on your entire account with one policy.

You will get details about the actual masking performed in your audit screens.

Static Masking | Virtual Static Masking using Views | Dynamic Masking using Views | Satori Universal Masking for Redshift | |

|---|---|---|---|---|

ETL | Yes | No, but configuration per table+redaction is a must | No, but configuration per table is a must | No |

DB Performance impact on reading data | No | Yes | Yes | No |

Configuration time per object | Long | Long | Long |

Short (optional per object) |

Row Level Masking | Yes | Yes | Yes | Yes |

Column Level Masking | Yes | Yes | Yes | Yes |

Policy Support

| No | No | No | No |

Is Data Classification a Prerequisite

| Yes | Yes | Yes | No (Data is automatically classified) |

Extended to other platforms (non-Redshift) | No | No | No | Yes |

Applies to new tables and columns | No | No | No | Yes |

Management via code | Yes | Yes | Yes | Yes |

Management via UI | No | No | No | Yes |