How Do You Benchmark AWS Redshift Performance?

Amazon Redshift is a fully managed data warehouse service designed for analytics workloads. It integrates with business intelligence (BI) tools and standard SQL-based clients, and it is built to deliver fast I/O and query performance across a wide range of dataset sizes. This is made possible by columnar storage combined with massively parallel processing (MPP), which distributes query execution across multiple nodes.

Although Redshift is designed for high performance, like any database it still requires workload and query optimization to perform at its best. Before you can optimize Redshift performance, however, you need to understand its unique performance and scalability features.

This article briefly reviews Redshift’s high-performance architecture, shows you how to build a reliable benchmark test for your Redshift workloads, and provides a few techniques for tuning Redshift performance.

This is part of our series of articles about Redshift security.

In this article, you will learn:

- Amazon Redshift Speed and Scalability
  - Improved Compression
  - Enhanced Query Planning
  - Dynamic Scaling
  - Scaling with Mixed Workloads
  - Price Performance
  - Ongoing Performance Optimization

- Building High Quality Benchmark Tests for AWS Redshift
  - Prefer to Run Test Machines in the Cloud
  - Number of Test Iterations
  - Query Execution Time, Runtime, and Throughput
  - Conserve Budget During Tests
  - Cluster Resize

- Performance Tuning Techniques for Amazon Redshift
  - Leverage Elastic Resizing and Concurrency Scaling
  - Use the Amazon Redshift Advisor
  - Using Auto WLM with Priorities to Increase Throughput
  - Leverage Federated Queries

Amazon Redshift Speed and Scalability

Amazon Redshift offers several technologies to speed up operations and scale as needed, including machine learning (ML) algorithms that automatically optimize performance. Additionally, Redshift offers improved compression, enhanced query planning, and tools for measuring costs and performance.

Improved Compression

Amazon Redshift uses a proprietary compression encoding called AZ64 to boost query performance. In AWS's own comparisons, AZ64 achieved roughly 35% better compression and roughly 40% faster performance than the traditional Lempel–Ziv–Oberhumer (LZO) lossless compression algorithm. To achieve this, AZ64 compresses smaller groups of data values and uses single instruction, multiple data (SIMD) instructions for parallel processing.
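If you prefer to set column encodings explicitly rather than rely on Redshift's automatic encoding (applied when a CREATE TABLE statement specifies none), the sketch below generates DDL that uses `ENCODE AZ64` for the numeric and date/time types AZ64 supports. The helper name and the choice of ZSTD as the fallback for other types are illustrative assumptions, not an AWS recommendation.

```python
# Types that support AZ64 encoding (numeric and date/time types).
AZ64_TYPES = {"SMALLINT", "INTEGER", "BIGINT", "DECIMAL", "DATE", "TIMESTAMP", "TIMESTAMPTZ"}


def create_table_ddl(table: str, columns: list[tuple[str, str]], fallback: str = "ZSTD") -> str:
    """Build a CREATE TABLE statement with an explicit ENCODE clause per column.

    AZ64 is used where the base type supports it; every other column gets
    `fallback` (ZSTD here is an illustrative choice).
    """
    lines = []
    for name, col_type in columns:
        base_type = col_type.split("(")[0].strip().upper()  # "DECIMAL(12,2)" -> "DECIMAL"
        encoding = "AZ64" if base_type in AZ64_TYPES else fallback
        lines.append(f"    {name} {col_type} ENCODE {encoding}")
    return f"CREATE TABLE {table} (\n" + ",\n".join(lines) + "\n);"


# Hypothetical table used only for illustration.
print(create_table_ddl("sales", [("sale_id", "BIGINT"), ("amount", "DECIMAL(12,2)"), ("note", "VARCHAR(256)")]))
```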

Enhanced Query Planning

The query planner determines the fastest way to process a given query by evaluating the estimated costs of different query plans. Additionally, the planner factors in other considerations, including the network stack. It uses algorithms such as HyperLogLog (HLL) to generate improved statistics, such as estimates of the number of distinct values in a column, which enable more accurate cost predictions.
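To illustrate the kind of statistic HLL produces, here is a minimal, textbook HyperLogLog sketch that estimates the number of distinct values in a stream. It is not Redshift's internal implementation, which AWS does not publish; the class name, the 12-bit register index (`p=12`, 4,096 registers), and the BLAKE2b hash are illustrative choices.

```python
import hashlib
import math


def _hash64(value: str) -> int:
    """Stable 64-bit hash (Python's built-in hash() is randomized per process)."""
    digest = hashlib.blake2b(value.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")


class HyperLogLog:
    """Textbook HyperLogLog sketch that estimates the number of distinct values."""

    def __init__(self, p: int = 12) -> None:
        if not 7 <= p <= 16:
            raise ValueError("p must be between 7 and 16")
        self.p = p
        self.m = 1 << p  # number of registers
        self.registers = [0] * self.m

    def add(self, value: str) -> None:
        h = _hash64(value)
        width = 64 - self.p
        index = h >> width  # top p bits choose a register
        rest = h & ((1 << width) - 1)  # remaining bits
        # Rank = 1-based position of the leftmost 1-bit in `rest` (width + 1 if rest == 0).
        rank = width - rest.bit_length() + 1
        if rank > self.registers[index]:
            self.registers[index] = rank

    def estimate(self) -> float:
        alpha = 0.7213 / (1 + 1.079 / self.m)  # bias constant, valid for m >= 128
        raw = alpha * self.m * self.m / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if raw <= 2.5 * self.m and zeros:
            # Small-range correction: linear counting over empty registers.
            return self.m * math.log(self.m / zeros)
        return raw
```

With 4,096 registers the expected relative error is about 1.04/√4096, or roughly 1.6%, and memory stays fixed no matter how many values are added. Adding a value that was already seen never changes the estimate, which is what makes the sketch suitable for distinct-count statistics.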

Dynamic Scaling

Redshift offers several options for scalability. Concurrency Scaling, for example, enables the data warehouse to handle spikes in load while maintaining consistent SLAs by elastically scaling underlying resources according to actual demand. Redshift continuously monitors your workloads and can automatically add transient cluster capacity to meet peaks in demand, routing queued queries to that capacity, which typically becomes available within seconds. Once the capacity is no longer needed, Redshift removes it.

Scaling with Mixed Workloads

Running multiple mixed workloads can be complex. Redshift offers several features that help you scale efficiently without repetitive manual tuning. For example, you can use automatic workload management (WLM) and query priorities to scale Redshift for unpredictable workloads and to make sure urgent queries are served first.

Price Performance

Price performance is the amount of compute work performed for a given hourly price. AWS states that Redshift manages costs so that, as your user base grows, Redshift becomes more efficient and costs grow more slowly than the number of users. AWS presents this as an advantage over data warehouses whose costs increase linearly as users are added.
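As a worked example of that definition, the sketch below divides the work completed by the dollars spent. The function name and all numbers are hypothetical, not measured Redshift results; "work units" could be queries completed or any other consistent measure of compute work.

```python
def price_performance(work_units: float, hours: float, hourly_price: float) -> float:
    """Work completed per dollar: work_units / (hourly_price * hours)."""
    if hours <= 0 or hourly_price <= 0:
        raise ValueError("hours and hourly_price must be positive")
    return work_units / (hourly_price * hours)


# Hypothetical benchmark results (not measured data): queries completed in one hour.
config_a = price_performance(work_units=1200, hours=1.0, hourly_price=4.0)  # 300 queries per dollar
config_b = price_performance(work_units=2000, hours=1.0, hourly_price=8.0)  # 250 queries per dollar
```

In this made-up comparison, configuration B has higher raw throughput, yet configuration A delivers better price performance, which is why it is worth tracking both metrics in a benchmark.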

Ongoing Performance Optimization

AWS measures Redshift performance, throughput, and price-performance on a nightly basis. AWS also regularly runs large, comprehensive benchmarks, such as the Cloud DW benchmark, which test scenarios that extend beyond the current needs of AWS customers, and optimizes Redshift based on the results.

Building High Quality Benchmark Tests for AWS Redshift

Here are a few guidelines for benchmarking your Amazon Redshift workloads.

Prefer to Run Test Machines in the Cloud

Benchmark environments can vary significantly between use cases. However, every benchmark test requires two components: a test machine, from which you initiate the benchmark test, and a running Redshift cluster that serves as the target.

For the host, you can use a remote laptop, an on-premises server, or a cloud resource. Traditionally, on-premises servers and remote laptops were used as benchmarking hosts. However, these hosts can introduce noise into the test, such as Internet transmission delays, VPN connectivity overhead, or insufficient memory and CPU.

You can often get more consistent results by hosting your benchmark test in the cloud. For example, you can use a new Amazon Elastic Compute Cloud (Amazon EC2) instance as the host. You can choose from a wide range of instance types, but be sure to choose one with sufficient vCPUs and memory. For the best I/O performance, install and launch benchmark scripts and tools from the instance's locally attached storage rather than from an attached EBS volume.

Number of Test Iterations

A single test run is not enough to produce trustworthy results. To ensure accuracy, run at least four test iterations: one warm-up iteration, which primes the Redshift cluster and populates its compile cache, followed by three measured iterations.

Redshift compiles queries to machine code. According to AWS, the vast majority of real-world queries (more than 99.6%) find their compiled code in the Redshift compile cache and therefore do not require compilation. If you skip the warm-up iteration, your results will include compilation time that real workloads rarely incur, and you will not see the performance improvement the compile cache provides.
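The warm-up-then-measure pattern can be sketched as a small harness. This is a minimal sketch, not an AWS tool: `run_benchmark` and its parameters are hypothetical names, and `run_query` is any callable that executes one query end to end, including fetching its results.

```python
import statistics
import time
from typing import Callable, Dict


def run_benchmark(
    run_query: Callable[[], object],
    measured_iterations: int = 3,
    warmup_iterations: int = 1,
) -> Dict[str, float]:
    """Run untimed warm-up iterations, then time the measured iterations."""
    if measured_iterations < 1:
        raise ValueError("need at least one measured iteration")
    # Warm-up: primes the compile cache; these runs are not timed.
    for _ in range(warmup_iterations):
        run_query()
    timings = []
    for _ in range(measured_iterations):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)
    return {
        "iterations": float(measured_iterations),
        "min_s": min(timings),
        "median_s": statistics.median(timings),
        "max_s": max(timings),
    }
```

For example, with a DB-API cursor `cur` from a driver such as `redshift_connector`, you might pass `lambda: (cur.execute(sql), cur.fetchall())`. Consider running `SET enable_result_cache_for_session TO off` on that session first; otherwise repeated iterations may be answered from Redshift's result cache instead of being executed, which would measure something other than query processing.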

Query Execution Time, Runtime, and Throughput

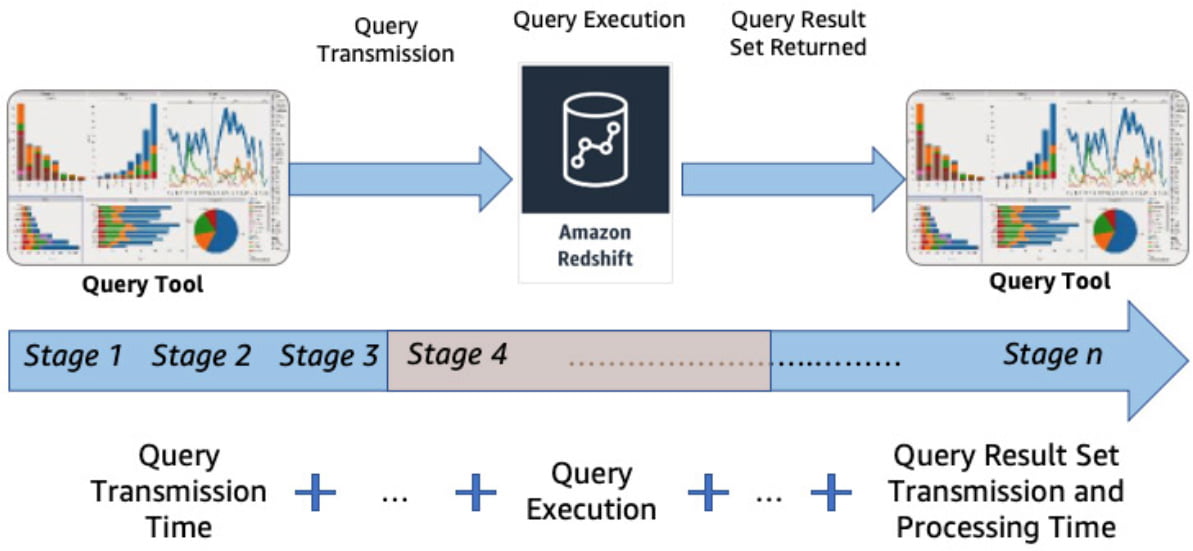

Benchmark tests should, ideally, measure the performance of query processing under different conditions. This involves varying common metrics, like query execution time, query throughput, and query runtime, across the query lifecycle.

The below diagram visualizes a basic query lifecycle.

Image Source: AWS

A benchmark test should exclude noise, meaning time spent in components outside the scope of the operation being measured. For example, Redshift cannot control network transmission speeds, so network time should be excluded from benchmark tests designed to measure query processing performance.
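One way to keep these metrics separate is to record three timestamps per query and derive each metric from them. In this sketch the record fields and function name are hypothetical, and the definitions are an assumption for illustration: execution time is the time Redshift spends executing the query, runtime is the end-to-end time seen by the client (including queue wait), and throughput is completed queries per hour across the test window. The timestamps could come from client-side timers combined with Redshift's system tables and views.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class QueryRecord:
    # Hypothetical per-query timestamps, in seconds.
    submitted: float  # client sent the query
    started: float    # Redshift began executing it (after any queueing)
    finished: float   # execution completed


def summarize(records: List[QueryRecord]) -> Dict[str, float]:
    """Average execution time, average runtime, and throughput (queries per hour)."""
    if not records:
        raise ValueError("no query records")
    exec_times = [r.finished - r.started for r in records]
    runtimes = [r.finished - r.submitted for r in records]
    window = max(r.finished for r in records) - min(r.submitted for r in records)
    return {
        "avg_execution_s": sum(exec_times) / len(exec_times),
        "avg_runtime_s": sum(runtimes) / len(runtimes),
        "throughput_qph": len(records) / window * 3600,
    }
```

Reporting execution time separately from runtime makes it easier to see whether a slowdown comes from query processing itself or from queueing and other overhead outside it.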

Conserve Budget During Tests

Benchmark tests can be simple and short, running for only a few hours. Often, however, you need to run a longer test over days or weeks, and costs can run high.

The following best practices help keep your budget lean when running a Redshift benchmark test:

- Pause: to keep costs within a reasonable budget, pause clusters when they will be idle for several consecutive hours, for example overnight or on weekends. While a cluster is paused, on-demand compute billing is suspended and you pay only for storage.

- Resume: rather than deleting the clusters allocated for benchmark testing when a test completes, keep them paused and resume them when needed. This way the clusters remain available if additional benchmark testing scenarios are required.

- Snapshot: before deleting your Redshift cluster, create a final snapshot. This is a cost-effective way to preserve the cluster's data so you can restore it for future tests.
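These three actions map to calls on a boto3 Redshift client. The sketch below assumes `client` is created with `boto3.client("redshift")`; the wrapper function names and the cluster and snapshot identifiers are hypothetical. Passing the client in as a parameter keeps the wrappers easy to test without touching a real cluster.

```python
def pause_when_idle(client, cluster_id: str) -> None:
    """Pause a benchmark cluster during idle periods, such as overnight."""
    client.pause_cluster(ClusterIdentifier=cluster_id)


def resume_for_next_run(client, cluster_id: str) -> None:
    """Resume a paused cluster when another benchmark scenario is needed."""
    client.resume_cluster(ClusterIdentifier=cluster_id)


def delete_with_final_snapshot(client, cluster_id: str, snapshot_id: str) -> None:
    """Delete the cluster, but keep a final snapshot to restore from later."""
    client.delete_cluster(
        ClusterIdentifier=cluster_id,
        SkipFinalClusterSnapshot=False,  # make sure the final snapshot is taken
        FinalClusterSnapshotIdentifier=snapshot_id,
    )
```

To bring the cluster back for a later test, you can restore it from the snapshot with `client.restore_from_cluster_snapshot(ClusterIdentifier=..., SnapshotIdentifier=...)`.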

Cluster Resize

Because Redshift clusters can be resized, a comprehensive benchmark should test several cluster sizes. A common approach is to double the number of nodes in a cluster and measure how this affects performance. Redshift offers two options for resizing your cluster as part of a benchmark:

- Classic resize: when you double the node count, the number of slices also doubles, providing more parallelization. Each slice has the standard CPU and memory capacity.

- Elastic resize: resizes Redshift clusters within minutes by redistributing the cluster's existing slices across more cluster nodes. Because there are fewer slices per node, each slice gets more computing resources.
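The slice arithmetic behind these two options can be made concrete with a simplified model. It assumes a fixed default number of slices per node and that an elastic resize keeps the total slice count unchanged; in practice, slice counts depend on the node type, so treat this as an illustration of the reasoning above rather than a sizing tool. The function name is hypothetical.

```python
from typing import Dict


def slices_after_resize(current_nodes: int, slices_per_node: int,
                        target_nodes: int, classic: bool) -> Dict[str, float]:
    """Simplified slice arithmetic for classic vs. elastic resize."""
    if min(current_nodes, slices_per_node, target_nodes) < 1:
        raise ValueError("node and slice counts must be positive")
    if classic:
        # Classic: every node in the resized cluster gets the default slice count.
        total = target_nodes * slices_per_node
    else:
        # Elastic: the existing slices are redistributed across the new node count.
        total = current_nodes * slices_per_node
    return {"total_slices": total, "slices_per_node": total / target_nodes}


# Doubling a hypothetical 4-node cluster with 2 slices per node to 8 nodes:
print(slices_after_resize(4, 2, 8, classic=True))   # 16 slices, 2 per node
print(slices_after_resize(4, 2, 8, classic=False))  # 8 slices, 1 per node
```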

Performance Tuning Techniques for Amazon Redshift

After you perform your benchmark and identify performance issues, you can use these guidelines to improve performance for your Redshift cluster.

Leverage Elastic Resizing and Concurrency Scaling

On-premises workloads require you to estimate, in advance, system requirements to ensure you build enough capacity to meet demand. Cloud-based clusters, on the other hand, enable you to right-size resources as needed. Redshift extends this ability by offering concurrency scaling and elastic resizing.

- Elastic resizing lets you quickly increase or decrease the number of compute nodes, for example doubling or halving the original node count of the cluster. You can also change node types with a resize; classic resize supports this, but it is considerably slower than elastic resize.

- Concurrency scaling enables your Redshift cluster to dynamically add capacity in response to changing demand.
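An elastic resize can also be requested programmatically. This sketch assumes `client` is a boto3 Redshift client (`boto3.client("redshift")`). It reads the current node count with `describe_clusters` and requests the new count with `resize_cluster`, where `Classic=False` asks for an elastic resize. The function name is hypothetical, and the double-or-halve restriction mirrors the range described above; the sizes actually allowed depend on the node type.

```python
def elastic_resize(client, cluster_id: str, direction: str) -> int:
    """Double or halve a cluster's node count with an elastic resize."""
    cluster = client.describe_clusters(ClusterIdentifier=cluster_id)["Clusters"][0]
    current = cluster["NumberOfNodes"]
    if direction == "double":
        target = current * 2
    elif direction == "halve":
        if current < 2 or current % 2:
            raise ValueError(f"cannot halve {current} nodes evenly")
        target = current // 2
    else:
        raise ValueError("direction must be 'double' or 'halve'")
    # Classic=False requests an elastic resize; Classic=True would request a classic resize.
    client.resize_cluster(ClusterIdentifier=cluster_id, NumberOfNodes=target, Classic=False)
    return target
```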

Use the Amazon Redshift Advisor

The Redshift Advisor provides recommendations that can help you improve the performance of your Redshift cluster and decrease overall operating costs. Advisor develops its customized, data-driven recommendations by analyzing performance statistics and usage metrics for your cluster.

Using Auto WLM with Priorities to Increase Throughput

Redshift runs queries through workload management (WLM) queues, letting you define up to eight queues for separate workloads. Auto WLM simplifies workload management and helps maximize query throughput by using machine learning (ML) to dynamically manage concurrency and memory for each workload, with the goal of making full use of your cluster resources. You can also assign a priority to each queue so that more important workloads get preferential access to resources.
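Auto WLM queues and their priorities are defined through the `wlm_json_configuration` parameter of a custom cluster parameter group. The sketch below builds one Auto WLM queue per query group, optionally with concurrency scaling enabled, plus a default queue, and applies it with boto3's `modify_cluster_parameter_group`. The JSON key names (`query_group`, `priority`, `queue_type`, `auto_wlm`, `concurrency_scaling`) reflect our understanding of the WLM format; verify them against the current WLM documentation before applying. The function names and query groups are hypothetical.

```python
import json
from typing import Dict

# Priority names accepted for Auto WLM queues.
VALID_PRIORITIES = {"lowest", "low", "normal", "high", "highest"}
MAX_QUEUES = 8


def build_auto_wlm_config(group_priorities: Dict[str, str], concurrency_scaling: bool = True) -> str:
    """One Auto WLM queue per query group, plus a default queue for everything else."""
    if len(group_priorities) + 1 > MAX_QUEUES:
        raise ValueError(f"at most {MAX_QUEUES} queues, including the default queue")
    queues = []
    for group, priority in group_priorities.items():
        if priority not in VALID_PRIORITIES:
            raise ValueError(f"unknown priority {priority!r} for group {group!r}")
        queue = {"query_group": [group], "priority": priority, "queue_type": "auto", "auto_wlm": True}
        if concurrency_scaling:
            queue["concurrency_scaling"] = "auto"  # allow transient capacity for this queue
        queues.append(queue)
    queues.append({"auto_wlm": True})  # default queue
    return json.dumps(queues)


def apply_wlm_config(client, parameter_group: str, config_json: str) -> None:
    """Write the configuration to a custom parameter group via a boto3 Redshift client."""
    client.modify_cluster_parameter_group(
        ParameterGroupName=parameter_group,
        Parameters=[{"ParameterName": "wlm_json_configuration", "ParameterValue": config_json}],
    )
```

For example, `build_auto_wlm_config({"dashboards": "highest", "etl": "low"})` gives interactive dashboard queries priority over batch ETL while Auto WLM still manages concurrency and memory for both.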

Leverage Federated Queries

The federated query feature lets you run analytics directly against live data in operational (OLTP) source databases, such as Amazon RDS and Amazon Aurora PostgreSQL and MySQL, and combine it with data in Redshift and in your Amazon S3 data lake, without performing ETL and ingesting the source data into Redshift tables. Instead of using micro-ETL batch ingestion of data into Redshift, you can use this feature to provide real-time data visibility in operational reports.
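A federated query starts by mapping a remote database schema into Redshift with `CREATE EXTERNAL SCHEMA ... FROM POSTGRES`. The sketch below only builds that statement as a string, which you would then run through any SQL client. The function name, host, IAM role, and Secrets Manager ARNs are hypothetical placeholders. MySQL sources use `FROM MYSQL` with somewhat different clauses, and because values are interpolated directly, the inputs must come from trusted configuration.

```python
def federated_schema_ddl(local_schema: str, database: str, remote_schema: str, host: str,
                         iam_role_arn: str, secret_arn: str, port: int = 5432) -> str:
    """Build CREATE EXTERNAL SCHEMA DDL for a PostgreSQL-compatible federated source."""
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {local_schema}\n"
        "FROM POSTGRES\n"
        f"DATABASE '{database}' SCHEMA '{remote_schema}'\n"
        f"URI '{host}' PORT {port}\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"SECRET_ARN '{secret_arn}';"  # database credentials stored in AWS Secrets Manager
    )


# Hypothetical values for illustration only.
print(federated_schema_ddl(
    local_schema="ops",
    database="orders_db",
    remote_schema="public",
    host="orders.cluster-example.us-east-1.rds.amazonaws.com",
    iam_role_arn="arn:aws:iam::123456789012:role/HypotheticalFederatedRole",
    secret_arn="arn:aws:secretsmanager:us-east-1:123456789012:secret:hypothetical-orders",
))
```

Once the schema exists, remote tables can be referenced as `ops.<table>` and joined with local Redshift tables in a single query, which is what removes the need for a separate micro-ETL step.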